The AR industry is improving devices’ understanding of our environment and making AI assistants more personal. To get there, several issues must be addressed at once: a compact form factor, all-day performance, and an understanding of user context. Solutions like VoxelSensors’ SPAES technology for spatial sensing and eye tracking, and PERCEPT, the company’s AI-driven contextual analysis platform, are essential to meeting these requirements.

SPAES (Single Photon Active Event Sensor) 3D sensing, developed by VoxelSensors, is a breakthrough technology that addresses critical depth-sensing performance limitations in robotics applications. The SPAES architecture does so by delivering 10x power savings and lower latency while maintaining robust performance across varied lighting conditions. This innovation is set to enable machines to understand both the physical world and human behaviour from the user’s point of view, advancing Physical AI.

On the way to elegant AR glasses with a human-like understanding of the user and their context, we have reached another significant milestone. We are now collaborating with Qualcomm to integrate SPAES with the Snapdragon AR2 Gen 1 Platform to enable high-performance XR experiences with significantly reduced power consumption. This step supports the development of AR devices that are more compact, efficient, and scalable.

Intelligent perception in AR depends on the quality of the sensor systems behind it. Together with Qualcomm, we are helping devices better understand and respond to the world around them from each user’s unique point of view.

Solving critical AR industry challenges

Current AR devices face significant limitations that VoxelSensors’ technology addresses. Today’s spatial mapping sensors struggle outside ideal conditions: structured-light systems fail in bright environments, time-of-flight sensors lack fine-detail precision, and stereo vision fails on textureless surfaces. VoxelSensors’ active event-based sensors offer ultra-low latency and power consumption while maintaining robust performance across various environmental conditions, enabling reliable contextual data collection in real-world scenarios.

Founded in Brussels, Belgium, in 2020, VoxelSensors is dedicated to advancing sensing technology for spatial and empathic interfaces. The company’s proprietary SPAES and PERCEPT technologies enable ultra-low power, ultra-low latency 3D perception, eye tracking, and contextual intelligence for next-generation XR and industrial applications.

Introducing a novel sensing modality: Active Event Sensor

The Active Event Sensor (AES) is a new kind of sensor architecture that provides asynchronous, binary detection of events when viewing an active light source or pattern.

VoxelSensors’ active event sensor does not acquire and output frames. Instead, each pixel is smart and generates an event only upon detection of the active light signal. Each position sample requires approximately 10 photons on average, with sample rates up to 100 MHz. In other words, an active event location in the image plane is obtained up to every 10 ns.
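The timing figures above follow from simple arithmetic, worked through in the short Python sketch below. The constant names are illustrative, and the photon throughput is only a rough estimate derived from the two quoted figures, not a published specification.

```python
# Figures quoted in the text above.
MAX_SAMPLE_RATE_HZ = 100e6   # position samples at up to 100 MHz
PHOTONS_PER_SAMPLE = 10      # approximate average photons per sample

# Time between consecutive position samples at the maximum rate.
sample_period_ns = 1e9 / MAX_SAMPLE_RATE_HZ

# Derived estimate (assumption): photons detected per second if every
# sample at the maximum rate used the average photon count.
photons_per_second = PHOTONS_PER_SAMPLE * MAX_SAMPLE_RATE_HZ

print(f"sample period: {sample_period_ns:.0f} ns")
print(f"photon budget: {photons_per_second:.1e} photons/s")
```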

Key patented technologies implemented in the Single Photon Active Event Sensor (SPAES) enable ambient light rejection, even in bright conditions.

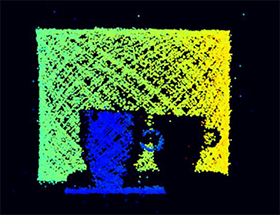

AES enables 3D sensing using laser beam triangulation

VoxelSensors’ novel 3D perception solution is a serialised triangulation system. Instead of searching for features that may be hard to see or even non-existent (passive and active stereo), or inferring depth from the deformations of a complex light pattern by stereo matching (structured light), the depth reconstruction is as simple as triangulating corresponding events between sensors by matching the timestamps. This simplicity greatly reduces the latency and power required during the computing step.

1. A Laser Beam Scanner (LBS) uses a scanning device (e.g., a bi-axial MEMS mirror) to project a laser beam dot in a continuous pattern, such as a raster scan or Lissajous pattern.

2. Two AES sensors capture the position of the laser dot up to every 10 ns and output the location of the active dot in their respective image planes in address-event representation (AER) format.

3. Based on the two continuous AES position streams, a simple triangulation algorithm computes the corresponding 3D point and outputs its position in 3D world coordinates.
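The three steps above can be illustrated with a minimal Python sketch of timestamp-matched triangulation. It is not VoxelSensors’ implementation. It assumes a rectified pair of sensors modelled as pinhole cameras, and the `Event` type, the calibration constants, and the matching tolerance are all hypothetical. Points are expressed in the left sensor’s frame; a real system would apply a calibrated transform to reach world coordinates.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Event:
    """One AER-style sample: when and where a sensor saw the laser dot."""
    t_ns: int   # timestamp in nanoseconds
    x: float    # column in the image plane, in pixels
    y: float    # row in the image plane, in pixels


# Hypothetical calibration of a rectified sensor pair (assumed values).
FOCAL_PX = 500.0      # focal length in pixels
BASELINE_M = 0.05     # distance between the two sensors in metres
CX, CY = 320.0, 240.0  # principal point in pixels


def triangulate(left: Event, right: Event) -> Tuple[float, float, float]:
    """Return (x, y, z) in metres, in the left sensor's frame."""
    disparity = left.x - right.x
    if disparity <= 0:
        raise ValueError("non-positive disparity: point cannot be triangulated")
    z = FOCAL_PX * BASELINE_M / disparity
    return ((left.x - CX) * z / FOCAL_PX, (left.y - CY) * z / FOCAL_PX, z)


def match_and_triangulate(
    left_stream: Iterable[Event],
    right_stream: Iterable[Event],
    tol_ns: int = 5,
) -> Iterator[Tuple[int, Tuple[float, float, float]]]:
    """Pair events from two time-sorted streams by timestamp and triangulate."""
    left_it, right_it = iter(left_stream), iter(right_stream)
    l, r = next(left_it, None), next(right_it, None)
    while l is not None and r is not None:
        dt = l.t_ns - r.t_ns
        if abs(dt) <= tol_ns:
            # Same instant on both sensors: the same laser dot position.
            yield l.t_ns, triangulate(l, r)
            l, r = next(left_it, None), next(right_it, None)
        elif dt < 0:
            l = next(left_it, None)   # left event has no partner, skip it
        else:
            r = next(right_it, None)  # right event has no partner, skip it


left = [Event(0, 370.0, 240.0), Event(10, 420.0, 290.0), Event(20, 400.0, 240.0)]
right = [Event(0, 345.0, 240.0), Event(10, 395.0, 290.0), Event(40, 380.0, 240.0)]
points = list(match_and_triangulate(left, right))
for t_ns, (x, y, z) in points:
    print(f"t={t_ns} ns  x={x:.3f} m  y={y:.3f} m  z={z:.3f} m")
```

Because correspondence comes from the timestamps alone, the sketch needs no feature search or pattern decoding; the last event in each list has no partner within the tolerance and is simply dropped.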

The output of this laser beam triangulation system is a stream of serialised 3D points, or voxels, with a new voxel added up to every 10 ns. The dynamic nature of the data stream unlocks new possibilities in computer vision, allowing for pipelined processing and customised perception schemes.
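To illustrate what pipelined processing of a serialised voxel stream can look like, the Python sketch below chains two generator stages that act on each voxel as it arrives instead of waiting for a complete frame. The `Voxel` tuple layout, the stage names, and the sample values are assumptions made for this example only.

```python
from typing import Iterable, Iterator, Tuple

# Hypothetical voxel layout: (timestamp in ns, x, y, z in metres).
Voxel = Tuple[int, float, float, float]


def within_range(voxels: Iterable[Voxel], max_depth_m: float) -> Iterator[Voxel]:
    """Stage 1: pass on only voxels no deeper than max_depth_m."""
    for voxel in voxels:
        if voxel[3] <= max_depth_m:
            yield voxel


def closest_so_far(voxels: Iterable[Voxel]) -> Iterator[Voxel]:
    """Stage 2: emit a voxel each time it becomes the nearest one seen."""
    best = float("inf")
    for voxel in voxels:
        if voxel[3] < best:
            best = voxel[3]
            yield voxel


# Made-up sample stream, one voxel every 10 ns.
stream = [
    (0, 0.0, 0.0, 2.0),
    (10, 0.1, 0.0, 5.0),
    (20, 0.2, 0.0, 1.5),
    (30, 0.3, 0.0, 1.8),
]

# Each voxel flows through both stages before the next one is read.
updates = list(closest_so_far(within_range(stream, max_depth_m=3.0)))
for update in updates:
    print(update)
```

Since every stage is a generator, downstream logic such as an obstacle alert could react to the first relevant voxel without buffering the rest of the scan.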

Benefits of this technology include low power consumption (ideal for battery-powered applications), low latency, and immunity to light pollution.

For more information visit www.voxelsensors.com

© Technews Publishing (Pty) Ltd | All Rights Reserved