AI has undergone a huge change in the past ten years. In 2012, convolutional neural networks (CNNs) were the state of the art for computer vision. Then around 2020, vision transformers (ViTs) redefined machine learning. Today, Vision-Language Models (VLMs) are changing the game again – blending image and text understanding to power everything from autonomous vehicles to robotics to AI-driven assistants.

This introduces a major problem at present: most AI hardware isn’t built for this shift. The bulk of what is shipping in applications like ADAS is still focused on CNNs never mind transformers. VLM? No.

Fixed-function Neural Processing Units (NPUs), designed for yesterday’s vision models, can’t efficiently handle VLMs’ mix of scalar, vector, and tensor operations. These models need more than just brute-force matrix math. They require:

• Efficient memory access – AI performance often bottlenecks at data movement, not computation.

• Programmable compute – Transformers rely on attention mechanisms that traditional NPUs struggle with.

• Scalability – AI models evolve too fast for rigid architectures to keep up.

AI needs to be freely programmable. Semidynamics provides a transparent, programmable solution based on the RISC-V ISA with all the flexibility that provides.

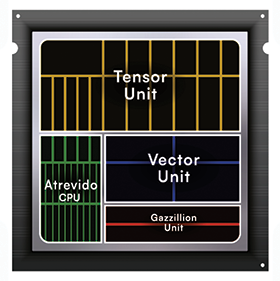

Instead of forcing AI into one-size-fits-all accelerators, you need architectures that let you build processors better suited to your AI workload. Semidynamics’ all-in-one approach delivers all the tensor, vector and CPU functionality required in a flexible and configurable solution. Instead of locking into fixed designs, a fully configurable RISC-V processor from Semidynamics can evolve with AI models, making it ideal for workloads that demand compute designed for AI, not the other way around.

VLMs aren’t just about crunching numbers. They require a mix of vector, scalar, and matrix processing. Semidynamics’ RISC-V-based all-in-one compute element can:

• Process transformers efficiently handling matrix operations and nonlinear attention mechanisms.

• Execute complex AI logic efficiently without unnecessary compute overhead.

• Scale with new AI models, adapting as workloads evolve.

Instead of being limited by what a classic NPU can do, these processors are built for the job and are fixing AI’s biggest bottleneck: memory bandwidth. It is well-known by engineers working in AI acceleration - memory is the real problem, not raw compute power. If a processor spends more time waiting for data than processing it, efficiency is being lost.

Semidynamics’ Gazzillion memory subsystem is a game-changer in AI acceleration applications for the following reasons:

• Reduces memory bottlenecks as it feeds data-hungry AI models efficiently.

• Smarter memory access allows the system to cope with slow, external DRAM by hiding its latency.

• Dynamic prefetching minimises stalls in large-scale AI inference.

For AI workloads, data movement efficiency can be as important as FLOPS. If hardware is not optimised for both, performance is being left on the table.

AI shouldn’t be held back by hardware limitations. That’s why RISC-V processors like Semidynamic’s all-in-one designs are the future, and yet most RISC-V IP vendors are struggling to deliver the comprehensive range of IP needed to build VLM-capable NPUs. Semidynamics can provide fully configurable RISC-V IP with advanced vector processing and memory bandwidth optimisation, giving AI companies the power to build hardware that keeps up with AI’s evolution.

If your AI models are evolving, why is your processor staying the same? The AI race won’t be won by companies using generic processors. Custom compute is the edge AI companies need.

For more information visit www.semidynamics.com

© Technews Publishing (Pty) Ltd | All Rights Reserved